There’s a quiet moment that happens before every good video: the pause between words on a page and images in motion.

When I work with script-to-video tools, I’m always listening for that moment. I’m asking: Does this tool understand the feeling behind the lines, or is it just decorating words with moving pictures?

In this guide, I’ll walk you through how script-to-video really works, how it differs from basic text-to-video, which tools feel emotionally reliable right now, and how to format your scripts so the AI doesn’t lose your story’s heartbeat. I’ll keep things clear and practical, but always grounded in what matters most: light, pacing, expression, and emotional coherence.

What Is Script-to-Video?

When I say script-to-video, I don’t just mean “type a sentence and get a clip.” Script-to-video is a workflow where you give an AI a structured script, with scenes, dialogue, and sometimes camera directions, and it tries to turn that into a sequence of shots that feels like a real video.

Instead of a single floating idea, the AI receives:

- who is talking

- what they’re saying

- where they are

- what’s happening in each moment

The goal isn’t only to generate pretty frames. It’s to create a visually and emotionally connected sequence where:

- lighting feels stable and intentional, not changing at random

- characters look like the same person from shot to shot

- pacing follows your story’s rhythm (not the AI’s guess)

- cuts feel motivated by emotion or action, not by confusion

A good script-to-video result feels like the AI has quietly read your script, taken a breath, and then tried to honor its emotional temperature, rather than just showing off what it can render.

Script-to-Video vs Text-to-Video: Key Differences

People often confuse script-to-video with text-to-video, but they behave very differently.

Text-to-video usually means:

- you type one prompt like “a girl walking through a neon city at night”

- the AI makes a short clip

- there’s no sense of scenes or story progression

Visually, text-to-video can look impressive, but it often feels like a single visual mood rather than a narrative. The motion may be pretty, yet emotionally shallow, like a music video loop with no inner change.

Script-to-video is closer to how a director reads pages. The AI tries to:

- respect scene boundaries

- shift locations and moods with the script

- keep character identity more stable over time

- respond to dialogue with matching expressions and body language

Where text-to-video is about “a moment”, script-to-video aims for “a sequence of moments”.

In practice, this means:

- YouTubers can go from written explainer to paced visuals.

- TikTok storytellers can turn skits or voiceovers into cut scenes.

- Brands can move from storyboard-like scripts to draft videos.

For you as a creator, script-to-video matters when you want your video to feel like it has chapters, beats, and emotional shifts, not just a single aesthetic loop.

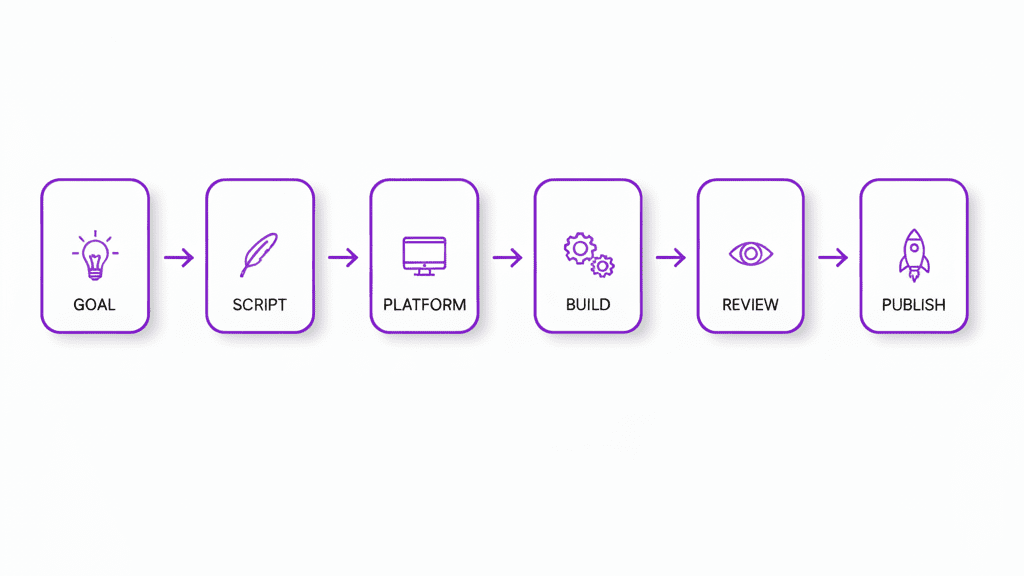

How Script-to-Video Works (5-Step Workflow)

When I test script-to-video tools, I see the same quiet structure underneath most of them. It isn’t usually visible in the interface, but the process feels like this.

Step 1 – Script Formatting & Scene Breakdown

The first thing the AI needs is clarity. If the script is a wall of text, the results often look confused: characters drift, the background keeps changing, and the pacing feels rushed.

So I start by gently structuring the script:

- Break it into scenes using clear markers like:

SCENE 1 – INTERIOR – BEDROOM – MORNING

- Put dialogue on its own lines with speaker names:

EMMA: I don't think I'm ready for this.

- Add clean, short description lines:

She sits on the edge of the bed, light from the window wrapping softly around her.

When I do this, I notice the AI’s visuals breathe better. The cuts become more intentional. The light feels less chaotic. There’s less visual “panic” in the frame.
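If you want to check your structure before pasting a script into a tool, here is a minimal sketch of the breakdown described above. It assumes the conventions from my examples (a `SCENE n` heading, an all-caps speaker name followed by a colon, and everything else treated as description). The names `Scene` and `parse_script` are my own illustration, not part of any tool's API.

```python
import re
from dataclasses import dataclass, field

# Assumed conventions, taken from the examples in this guide:
#   scene heading:  "SCENE 1 – INTERIOR – BEDROOM – MORNING"
#   dialogue line:  "EMMA: I don't think I'm ready for this."
#   anything else:  a description line
SCENE_RE = re.compile(r"^SCENE\s+(\d+)\s*[–-]\s*(.+)$")
DIALOGUE_RE = re.compile(r"^([A-Z][A-Z ]*):\s*(.+)$")


@dataclass
class Scene:
    number: int
    heading: str
    dialogue: list = field(default_factory=list)      # (speaker, line) pairs
    descriptions: list = field(default_factory=list)  # plain description lines


def parse_script(text: str) -> list:
    """Split a plain-text script into scenes with dialogue and descriptions."""
    scenes = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        heading = SCENE_RE.match(line)
        if heading:
            scenes.append(Scene(int(heading.group(1)), heading.group(2)))
            continue
        if not scenes:
            # Text before the first heading has no scene to belong to.
            continue
        spoken = DIALOGUE_RE.match(line)
        if spoken:
            scenes[-1].dialogue.append((spoken.group(1).strip(), spoken.group(2)))
        else:
            scenes[-1].descriptions.append(line)
    return scenes
```

If a scene comes back with no dialogue and no description, or if the function returns an empty list, that is usually the wall-of-text problem showing itself before the AI ever sees it.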

Step 2 – Shot List Generation

Some tools automatically split scenes into shots; with others, I have to guide them.

A shot list is just a simple breakdown of how we see each moment, for example:

- Wide shot – Emma in her bedroom, early morning light

- Medium shot – Emma from the side, hands fidgeting in her lap

- Close-up – Emma’s eyes, unsure but hopeful

Even if the platform doesn’t show you a formal shot list, adding hints inside your script like “close-up on her hands” or “wide shot of the empty hallway” often leads to more emotionally tuned results.

When the AI has this gentle guidance, cuts feel less random. You get fewer sudden angle changes that feel like the camera is anxious.
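To see which shots a script is actually asking for, a small sketch like the one below can pull out the lines that carry a camera hint. The cue vocabulary in `SHOT_CUES` is an assumption based on the phrases used in this guide, and `extract_shot_list` is a hypothetical helper, not something any platform provides.

```python
# Assumed cue vocabulary; extend it to match the wording you actually use.
SHOT_CUES = ("close-up", "medium shot", "wide shot", "over-the-shoulder", "push-in")


def extract_shot_list(lines):
    """Return (shot_type, line) pairs for lines that contain a known camera cue."""
    shots = []
    for line in lines:
        lowered = line.lower()
        for cue in SHOT_CUES:
            if cue in lowered:
                shots.append((cue, line.strip()))
                break  # record one cue per line to keep the list readable
    return shots
```

An empty result on a long scene is a gentle reminder that the AI will be guessing every angle on its own.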

Step 3 – Visual Asset Mapping

Next, the AI silently decides: What should this line look like?

In this phase, the tool is matching:

- Characters → consistent face, hairstyle, clothing

- Locations → bedroom, street, office, café

- Props → phone, coffee cup, notebook

- Atmosphere → warm sunrise, cool office light, moody night streets

When this step goes well, the video feels grounded:

- skin texture remains believable instead of turning plasticky between shots

- backgrounds don’t “melt” or pulse strangely behind the subject

- colors stay within a coherent palette (for example, all shots share a warm, gentle tone)

When it goes poorly, I see things like:

- outfits changing mid-conversation

- eyes that hesitate or slightly misalign between frames

- a background that seems to breathe in a way that doesn’t feel natural

That’s usually a sign the tool needs a simpler, clearer script, or fewer characters.
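One way to keep those mappings steady is to write each character and the palette down once and reuse the same wording everywhere. The sketch below is only an illustration of that habit: the `Character` fields and the `continuity_note` helper are hypothetical, and whether a given tool honors the note is something you will need to test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Character:
    name: str
    look: str    # face and hairstyle, written once
    outfit: str  # clothing that should persist across shots


def continuity_note(characters, palette):
    """Render one reusable text block describing the palette and each character."""
    lines = [f"Palette: {palette}."]
    for c in characters:
        lines.append(f"{c.name}: {c.look}; wearing {c.outfit}. Same in every shot.")
    return "\n".join(lines)
```

For example, `continuity_note([Character("EMMA", "shoulder-length dark hair", "grey sweater")], "warm, gentle tones")` produces two short lines you can paste at the top of every scene. The hair and sweater here are placeholder details, not a recommendation.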

Step 4 – AI Video Generation

This is the part everyone focuses on, but I see it as just one step.

Here, the tool turns all those instructions into actual motion. What I’m watching for is not just sharpness, but emotional stability:

- Does the light stay believable from the start of the shot to the end?

- Does the motion feel intentional, or does the camera drift nervously?

- Do faces hold their structure when they turn, or do they briefly dissolve?

A good script-to-video output here feels like the camera has confidence. Movements are steady; the camera doesn’t wobble unless it’s supposed to. The model doesn’t become shy in darker scenes or overexposed in bright ones.

When it struggles, fast motion becomes smeared, fingers twitch, or hair jitters at the edges. It’s not a failure, just a sign to slow your scenes down and favor calmer actions.

Step 5 – Assembly & Post-Edit

Most tools give you either:

- a single stitched video, or

- a series of clips to arrange on a timeline.

This final step is where your eye matters most.

In post-edit, I:

- trim any frames where faces briefly distort

- soften jump cuts that feel too abrupt emotionally

- align shots to the rhythm of the voiceover or dialogue

- gently correct color so the whole piece shares one emotional temperature

Even a quick pass in a basic editor can turn “interesting AI output” into something that feels like a real, intentional piece of video storytelling.
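Aligning shots to the voiceover is mostly simple arithmetic, so here is a rough sketch of it. It assumes one generated clip per voiceover line and that you already know each duration in seconds; both function names are hypothetical helpers of mine, not features of any editor.

```python
from itertools import accumulate


def cut_points(line_durations):
    """Return the cumulative times (in seconds) at which each voiceover line ends."""
    return list(accumulate(line_durations))


def trim_to_fit(clip_durations, line_durations):
    """For each clip, return how many seconds to trim so it matches its line.

    A negative value means the clip is shorter than the line and needs
    extending (or a different cut) rather than trimming.
    """
    if len(clip_durations) != len(line_durations):
        raise ValueError("expected one clip per voiceover line")
    return [round(clip - line, 3) for clip, line in zip(clip_durations, line_durations)]
```

With voiceover lines of 2.0, 3.5, and 1.5 seconds, `cut_points` gives cuts at 2.0, 5.5, and 7.0 seconds, which is where I would place the edits on the timeline before doing any finer emotional adjustment by eye.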

Best AI Tools for Script-to-Video (2025 Comparison)

There are many tools emerging, but a few have a clear visual personality. Here’s how I experience them, through a cinematographer’s eyes rather than a technician’s.

Runway Gen-4.5 – Best for Precision Control

When I feed a structured script into Runway’s newer generation, the results often feel deliberate.

Visually, I notice:

- strong respect for framing when I hint at shots (close-up, wide, over-the-shoulder)

- relatively stable character identity across short sequences

- lighting that usually stays coherent within a scene, especially in natural light setups

It can struggle a little with very fast action or chaotic crowds, but for YouTube explainers, short narrative pieces, and social content where you want a clear, controlled look, it behaves like a careful cinematographer.

Kling 2.6 – Best for Long-Form Scripts

Kling’s more recent builds tend to feel more comfortable with longer scripts and extended motion.

What I see visually:

- smoother continuity over longer durations

- fewer jarring changes in background from shot to shot

- motion that, while not perfect, feels more patient and less jittery

If you’re working on talking-head storytelling, educational content, or longer narrative shorts, Kling 2.6 can offer a sense of story persistence. It still struggles a bit with subtle facial expression shifts over many scenes, but with gentle pacing, it holds together.

Sora 2 – Best for Cinematic Quality

From the previews and early tests I’ve seen, Sora 2 leans toward cinematic richness.

The frames often have:

- layered atmospheric depth (haze, reflections, soft background texture)

- lighting that feels emotionally tuned: sunset warmth, cold office fluorescents, dreamy nighttime streets

- motion that reads as naturally weighted: footsteps, wind, fabric

Where it can be fragile is in fine detail under stress: very busy scenes, rapid camera swoops, or complex hand interactions. It tries hard, but sometimes it lacks emotional subtlety in the micro-expressions if too much is happening at once.

For cinematic openings, short mood pieces, brand intros, or poetic storytelling, Sora 2 can feel closest to traditional filmmaking aesthetics.

Script Format That AI Understands Best

The way you write your script shapes how the AI sees your world. These three formats help it feel less confused and more visually confident.

Dialogue Format

Keep dialogue simple and clearly labeled:

EMMA: I don't think I'm ready for this.

JAMES: You don't have to be. Just take the first step.

Why it helps visually:

- the AI knows who should be on screen

- it can guess whose face needs the emotional focus

- timing of mouth movement and expression becomes easier to align

Avoid stacking multiple speakers in one block of text. That’s when I see eyes hesitate or lip-sync drift.
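A quick way to catch stacked speakers before generating is to scan for lines that contain more than one speaker label. This is a minimal sketch that assumes the all-caps `NAME:` convention shown above and single-word names; `stacked_speaker_lines` is a hypothetical helper.

```python
import re

# Assumed convention from the examples above: an all-caps name, then a colon.
SPEAKER_RE = re.compile(r"\b([A-Z]{2,}):")


def stacked_speaker_lines(text):
    """Return (line_number, speakers) for lines containing more than one speaker label."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        speakers = SPEAKER_RE.findall(line)
        if len(speakers) > 1:
            problems.append((number, speakers))
    return problems
```

Any line it reports is a candidate for splitting so that each speaker gets a line, and a face, of their own.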

Scene Description Format

Give each scene a brief, visual description:

SCENE 1 – INTERIOR – BEDROOM – MORNING

Soft light from a single window. Emma sits at the edge of her bed, the room quiet and slightly messy.

Use sensory hints the AI can translate into visuals:

- light (soft, harsh, warm, cool)

- space (small, open, cluttered, minimal)

- mood (tense, calm, hopeful, lonely)

You don’t need long paragraphs. Two or three grounded lines per scene are enough. Too many adjectives can actually make the visuals feel uncertain.

Camera Direction Format

Adding light camera cues can gently guide composition:

CLOSE-UP on Emma's hands gripping the bedsheet.

WIDE SHOT of the empty hallway outside her door.

SLOW PUSH-IN on Emma as she finally stands up.

These hints help the AI:

- decide when to move in close for emotion

- keep some shots wide so the world feels real

- avoid constant mid-shots, which can flatten the visual rhythm

Used sparingly, camera directions bring an emotional cadence to the final video, like breath in and breath out.
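To check whether a sequence is drifting into constant mid-shots, you can look for the longest run of identical shot types. The sketch below assumes you have already written your shots down as a simple list of labels; `longest_same_shot_run` is a hypothetical helper, and how long a run is too long is a matter of taste.

```python
from itertools import groupby


def longest_same_shot_run(shot_types):
    """Return (shot_type, length) for the longest run of identical consecutive shots."""
    best = (None, 0)
    for shot_type, group in groupby(shot_types):
        length = sum(1 for _ in group)
        if length > best[1]:
            best = (shot_type, length)
    return best
```

For the list `["wide", "medium", "medium", "medium", "close-up"]` it returns `("medium", 3)`, which tells me where a close-up or a wide shot might let the sequence breathe.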

Copy-Paste Prompt Templates for Script-to-Video

Here are some gentle starting points you can adapt. Replace the brackets with your own details.

- Narrative YouTube Short (dialogue-based)

“Convert this script to a short cinematic video.

Style: soft natural light, gentle contrast, realistic skin texture, stable character identity.

Focus on: emotionally clear facial expressions, smooth cuts, steady camera.

Keep the pacing calm but engaging.

Script:

[PASTE YOUR SCRIPT HERE]

”

- TikTok Storytelling Video (vertical)

“Turn this script into a vertical story video.

Keep one main character consistent. Use close-ups and medium shots.

Style: warm color palette, soft light, minimal background distractions.

Make the motion stable and avoid fast chaotic movements.

Script:

[PASTE YOUR SCRIPT HERE]

”

- Explainer / Tutorial with B-Roll

“Convert this script into a video with a mix of talking-head style shots and simple illustrative B-roll.

Style: clean, bright, natural lighting, soft shadows, clear composition.

Use gentle camera motion and clear scene changes.

Prioritize readability of text or objects shown.

Script:

[PASTE YOUR SCRIPT HERE]

”

You can always add small emotional notes like “the mood is hopeful and calm” or “the atmosphere is quiet and introspective” to help the AI choose the right visual temperature.
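If you reuse these templates often, filling them in by code keeps the wording consistent from project to project. Here is a minimal sketch using Python's standard `string.Template`. The template text is adapted from the narrative template above, and the field names (`style`, `mood_line`, `script`) and the `build_prompt` function are my own assumptions.

```python
from string import Template

# Wording adapted from the narrative template above; field names are hypothetical.
NARRATIVE_SHORT = Template(
    "Convert this script to a short cinematic video.\n"
    "Style: ${style}.\n"
    "Focus on: emotionally clear facial expressions, smooth cuts, steady camera.\n"
    "Keep the pacing calm but engaging.\n"
    "${mood_line}"
    "Script:\n"
    "${script}\n"
)


def build_prompt(script, style, mood=None):
    """Fill the template; an optional mood becomes one extra line before the script."""
    mood_line = f"The mood is {mood}.\n" if mood else ""
    return NARRATIVE_SHORT.substitute(
        style=style, mood_line=mood_line, script=script.strip()
    )
```

Calling `build_prompt(my_script, "soft natural light", mood="hopeful and calm")` gives you the same prompt every time, with only the script and the emotional note changing.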

Troubleshooting: Pacing, Cuts & Consistency Issues

When script-to-video tools misbehave, they rarely say why. I read the problems through the images themselves.

Problem: Pacing feels rushed or chaotic

- What I see: shots cut too quickly, expressions don’t have time to land.

- Gentle fix:

  - shorten your script or split it into more scenes

  - add lines like “hold on this moment for a second”

  - reduce unnecessary dialogue so visuals can breathe

Problem: Cuts feel random

- What I see: angles jump without emotional logic.

- Gentle fix:

  - add simple cues: “close-up here”, “wide shot here”

  - group lines that belong in the same shot

  - avoid mixing many locations in a single paragraph

Problem: Character looks different between shots

- What I see: face shape shifts, hair changes, eyes hesitate.

- Gentle fix:

  - keep the number of main characters small

  - describe the character once, clearly, then repeat their name

  - use phrases like “same character as before with the same outfit and hairstyle”

Problem: Background feels like it’s “breathing” or melting

- What I see: walls, objects, or textures drift strangely.

- Gentle fix:

  - choose simpler backgrounds in your descriptions

  - avoid too many moving elements in one scene

  - prioritize one or two key props instead of clutter

Often, calming the script calms the visuals. When the text is clear and unhurried, the images follow.
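For the pacing problem in particular, a rough estimate of how long each scene's dialogue takes to speak can show where a script is too dense. The sketch below is an approximation under stated assumptions: 150 words per minute is an assumed conversational pace, the 20-second ceiling is a placeholder to tune for your tool, and both function names are hypothetical.

```python
def estimated_seconds(dialogue_lines, words_per_minute=150):
    """Estimate speaking time for a list of dialogue strings.

    150 words per minute is an assumed conversational pace, not a measured one.
    """
    words = sum(len(line.split()) for line in dialogue_lines)
    return words * 60 / words_per_minute


def dense_scenes(scenes, max_seconds=20):
    """Return names of scenes whose estimated dialogue time exceeds max_seconds.

    `scenes` maps a scene name to its list of dialogue strings; the default
    ceiling is a placeholder value, not a recommendation from any tool.
    """
    return [
        name for name, lines in scenes.items()
        if estimated_seconds(lines) > max_seconds
    ]
```

Scenes that show up in the result are the ones I would consider splitting or trimming first, so the expressions have time to land.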

FAQ

Can AI automatically split my script into scenes?

Yes, many tools can, but I don’t fully rely on them. When the AI guesses scene breaks, it sometimes cuts in emotionally odd places. I prefer to mark scenes myself with clear headings so the pacing feels more intentional.

What script format works best for AI video generation?

A simple mix of scene headings, short visual descriptions, and cleanly labeled dialogue works best. You don’t need complex formatting, just enough structure so the AI knows who is speaking, where we are, and what the emotional moment looks like.

How long does it take to convert a 5-page script into video?

For most current tools, generating a first pass from a 5-page script can take anywhere from a few minutes to around half an hour, depending on resolution and length. I always add extra time for gentle post-editing, trimming odd frames, and adjusting color so the whole piece shares one consistent emotional atmosphere.

If you treat script-to-video as a quiet collaboration instead of a magic button, it becomes a surprisingly tender assistant. Let the tools handle the first draft of your visuals, but keep your eye on the light, the rhythm, and the small emotional pauses in the frame. That’s where your story really lives.