Last month, I listened to an AI voice read a bedtime story to my niece. She didn’t ask if it was real. That’s when I knew something had shifted.

Finding the best AI voice generator in 2025 isn’t about specs or features anymore—it’s about whether the voice makes you lean in or pull away. I’ve spent the past three months testing every major platform, listening for the small human moments: the breath before a reveal, the softness in a vulnerable sentence, the way natural speech holds space.

According to Zapier’s testing, the best AI voice generators now produce speech that sounds “natural and realistic, almost (almost!) as if a real person is saying the words.” But which one truly delivers on that promise?

What Makes an AI Voice Generator “Best” in 2025

The answer to “What is the best AI voice generator?” depends entirely on what your ears need.

I listen for four quiet truths:

Emotional presence — Does the voice hesitate in the right places? One YouTuber reached 6,000 subscribers and 8 million views in three months using only AI voiceovers, which suggests that when done right, audiences don’t resist synthetic voices; they respond to them.

Texture and breath — Real voices have micro-imperfections. The best tools in 2025 understand this. They add gentle pauses, slight pitch variations, and natural pacing that feels like a conversation, not a performance.

Identity stability — When you’re creating a series or building a brand voice, consistency matters. The voice shouldn’t shift personalities between episodes.

Adaptive pacing — This is where most tools still stumble. Can the voice slow down for reflection? Speed up for excitement? The best AI voice generators of 2025 know when to breathe.

The Best AI Voice Generators for Real Human Sound

ElevenLabs: When Emotion Matters Most

Every time I return to ElevenLabs, I’m reminded why it leads. Industry testing confirms that “no one can compete with ElevenLabs” for realistic voice quality, and my experience echoes this.

What I notice:

- Warmth without artifice — The voices feel present but not theatrical

- Pause intelligence — It knows where silence carries meaning

- Multilingual emotion — Support for 29 languages with native speaker accents maintains consistent quality across languages

Reality check: Users report actual costs running 2.2-2.8x advertised rates due to regenerations. Budget accordingly. The free tier gives you 10,000 characters monthly—enough for testing, but professional work needs paid plans starting at $5/month.
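To make “budget accordingly” concrete, here is a minimal sketch of the arithmetic in Python. The 2.2 and 2.8 multipliers and the 10,000-character quota come from the figures above. The function names are hypothetical, and the idea that regenerations eat into a character quota at the same rate they inflate cost is an assumption made for illustration, not something any platform documents.

```python
# Regeneration multipliers reported by users (from the reality check above).
REGEN_LOW, REGEN_HIGH = 2.2, 2.8


def effective_cost_range(advertised_cost):
    """Return (low, high) real spend for work priced at advertised_cost."""
    return (
        round(advertised_cost * REGEN_LOW, 2),
        round(advertised_cost * REGEN_HIGH, 2),
    )


def usable_characters(quota):
    """Return (worst, best) finished characters a quota yields.

    Assumption: every regenerated take is charged against the same quota.
    """
    return (int(quota / REGEN_HIGH), int(quota / REGEN_LOW))


if __name__ == "__main__":
    print(effective_cost_range(5.0))   # spend for what a $5 plan nominally covers
    print(usable_characters(10_000))   # finished characters from the free tier
```

Under those assumptions, audio that nominally fits a $5 plan costs about $11 to $14 in practice, and the free tier yields roughly 3,500 to 4,500 characters of finished narration rather than the full 10,000.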

Best for: Documentary narrators, YouTube storytellers, audiobook creators who need voices that carry emotional weight across long passages.

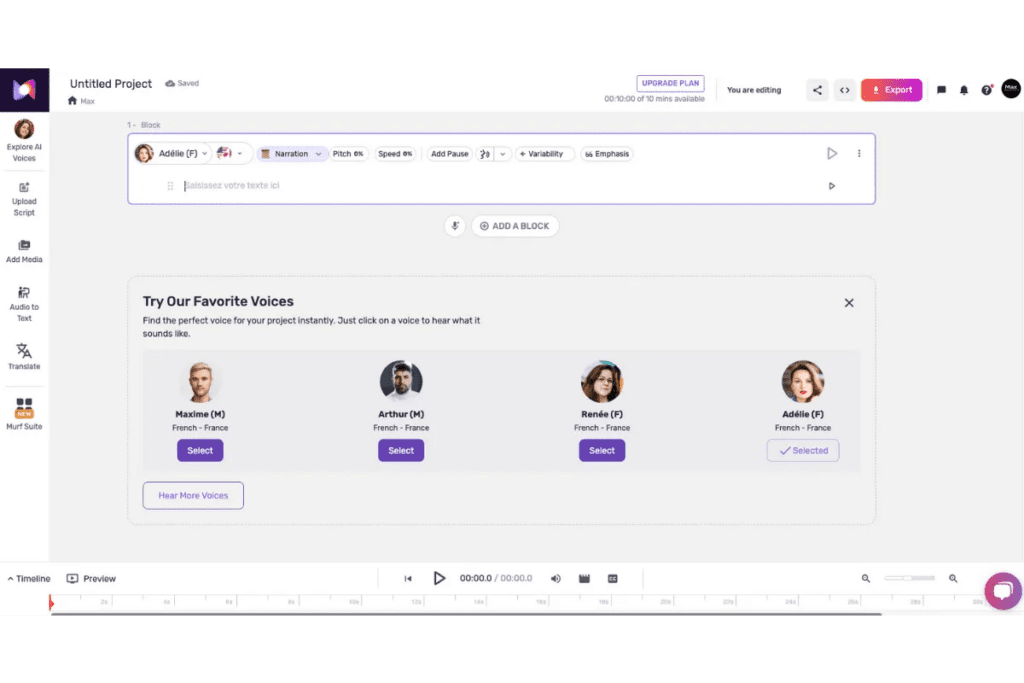

Murf AI: Steady Across Languages

Murf is the reliable friend in your toolkit. With 200+ voices across 35+ languages and 99.38% pronunciation accuracy, it handles technical terms and multilingual projects with calm consistency.

The voice texture is slightly more reserved—think professional presenter rather than intimate storyteller. For corporate training, product explainers, or educational content where clarity trumps character, this restraint works beautifully.

Technical note: Murf uses IPA (International Phonetic Alphabet) for authentic accents and brand-specific pronunciation—a small detail that matters when brand names appear throughout your script.
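As an illustration of what a phonetic override can look like, the W3C’s SSML standard defines a `phoneme` element that carries an IPA transcription. The sketch below builds one in Python. Whether Murf, or any other platform named here, accepts this exact markup is an assumption to check against its own documentation. The helper names and the sample brand are hypothetical.

```python
from xml.sax.saxutils import escape, quoteattr


def phoneme_tag(word, ipa):
    """Wrap a word in an SSML <phoneme> element with an IPA transcription."""
    return f'<phoneme alphabet="ipa" ph={quoteattr(ipa)}>{escape(word)}</phoneme>'


def apply_pronunciations(script, overrides):
    """Return an SSML <speak> document with each override word tagged.

    Uses plain substring replacement, so a short word that also appears
    inside a longer word would be tagged there too.
    """
    marked = escape(script)  # escape &, <, > before adding markup
    for word, ipa in overrides.items():
        marked = marked.replace(escape(word), phoneme_tag(word, ipa))
    return f"<speak>{marked}</speak>"


if __name__ == "__main__":
    # Illustrative brand name and transcription.
    print(apply_pronunciations("Nike & friends", {"Nike": "ˈnaɪki"}))
```

Keeping the overrides in one dictionary means a brand name is transcribed once and applied the same way everywhere it appears in the script.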

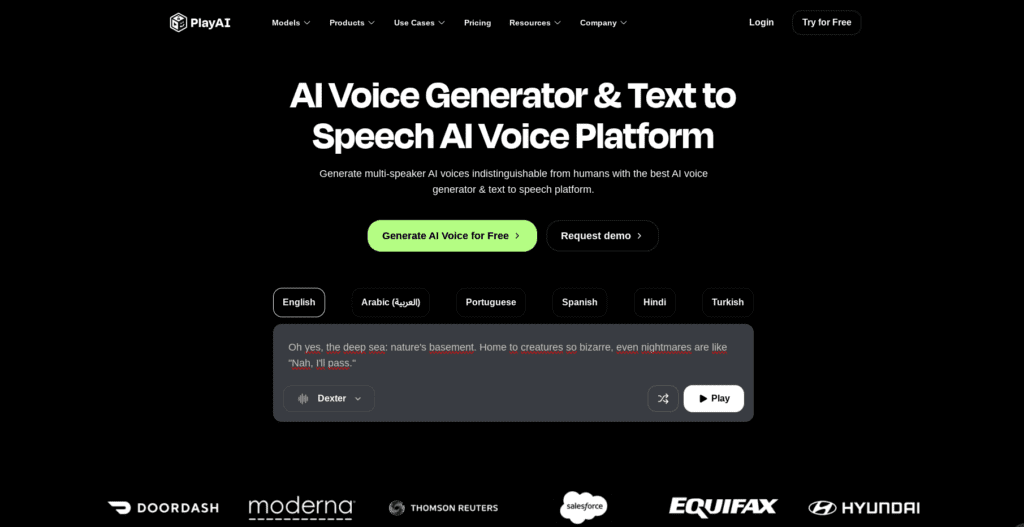

Play.ht: Speed Meets Expression

For teams moving fast, Play.ht delivers. The platform offers 140+ languages and 800+ styles with adjustable delivery tones including whispering, anger, and friendliness.

I appreciate the speed, but watch the emotional edges—sometimes the voice tries too hard. Use gentler emotion settings and let the words breathe naturally.

Speechify: The Reader’s Choice

Speechify understands rhythm. It treats text like music, respecting commas and paragraph breaks with readerly patience. For long-form narration—podcasts, newsletters, educational modules—it maintains momentum without rushing.

Accessibility insight: Speechify supports 200+ voices in 60+ languages, with features specifically designed for users with dyslexia, ADHD, or vision impairments.

How These Voices Actually Work

The underlying idea is simple: neural networks trained on recordings of human speech learn to reproduce tone, pitch, and emotion.

But here’s what that means for your work:

Voice cloning needs clean audio — Professional-quality recordings are essential: quiet room, quality microphone, consistent distance, no background noise, and varied emotional samples. Give the AI poor recordings, and you’ll hear it in every generated line.

Punctuation is your director — I’ve learned to think of commas as breath marks and periods as full pauses. Short sentences create weight. Long flowing sentences invite the voice forward.
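One way to review a script’s punctuation before generating audio is a quick pacing report. This is a rough sketch in Python with a hypothetical function name. It only counts words and commas per sentence; it does not model how any particular voice engine interprets punctuation.

```python
import re


def pacing_report(script):
    """List each sentence with its word count and comma count.

    Short sentences read as weight; many commas mean many small breaths.
    """
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    return [
        {"sentence": s, "words": len(s.split()), "commas": s.count(",")}
        for s in sentences
        if s
    ]


if __name__ == "__main__":
    for row in pacing_report("She waited. Then, slowly, the door opened."):
        print(row["words"], row["commas"], row["sentence"])
```

A run of long sentences with no commas is a hint that the voice will have nowhere to breathe, and a script made only of two-word sentences may land heavier than intended.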

Real-World Testing: What Actually Happens

I ran each platform through the same 200-word script—mixing technical terms, emotional moments, and quick dialogue. Here’s what I heard:

ElevenLabs: Handled emotional shifts naturally but occasionally over-emphasized exclamation points. Solution: remove the exclamation, trust the words.

Murf: Steady across the entire read, but the tender moment felt slightly clinical. Perfect for professional contexts, less ideal for intimate storytelling.

Play.ht: Fast generation, expressive delivery, but pronunciation of brand names required manual correction using their phonetic tools.

Speechify: Beautiful pacing on the narrative sections. Struggled slightly with rapid back-and-forth dialogue.

Choosing Your Voice Partner

Start here:

Test with your actual content — Not sample text. Use 20 seconds of your real script including one playful line, one reflective moment, and one technical term.

Listen for presence — Does the voice feel like it’s in the room with you, or does it feel like it’s being broadcast from somewhere distant?

Check identity across moods — Generate the same sentence five times. How much does it vary? Some variance is human; too much is unstable.

Budget reality — According to DemandSage’s testing, most tools offer free tiers for testing, but professional use requires paid plans. Factor in regeneration costs.
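The “generate the same sentence five times” check can be made a little less subjective by comparing the lengths of the five takes. The sketch below assumes you have noted each clip’s duration in seconds yourself. Duration is only a crude proxy for vocal identity, and the 5% threshold is an arbitrary starting point, not an industry standard. Both function names are hypothetical.

```python
from statistics import mean, pstdev


def variation_ratio(durations):
    """Standard deviation of the clip lengths divided by their mean."""
    return pstdev(durations) / mean(durations)


def is_stable(durations, max_ratio=0.05):
    """True when take-to-take variation stays under max_ratio (assumed 5%)."""
    return variation_ratio(durations) <= max_ratio


if __name__ == "__main__":
    takes = [4.1, 4.0, 4.2, 3.9, 4.0]  # hypothetical durations in seconds
    print(round(variation_ratio(takes), 3), is_stable(takes))
```

Your ears remain the final judge. A number like this is mainly useful for comparing two platforms on the same sentence.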

What’s Coming Next

The 2025 landscape is moving toward presence over perfection. Recent developments include emotion control that maintains realistic vocal performance and hybrid waveform-MIDI editors for precise articulation.

I’m watching for:

- Gentler breath sounds that feel human without being distracting

- Better emotional coherence across chapter-length narratives

- Tools that understand the relationship between visual tone and voice pacing

The Bottom Line

The best AI voice generator for real people in 2025 is the one that disappears into your story.

Choose ElevenLabs if emotional nuance is non-negotiable and you can budget for quality. Real creators have built successful channels generating millions of views using its natural-sounding voices.

Choose Murf if you need multilingual consistency and professional clarity across large-scale projects.

Choose Play.ht if speed and tonal variety matter more than subtle emotional gradation.

Choose Speechify if your content is long-form reading or accessibility is a core requirement.

The technology has reached a quiet threshold: audiences no longer question whether a voice is AI. They only notice if it serves the story. That’s the standard we’re working with now—not perfect simulation, but emotional connection.

When the voice feels warm enough to trust, your audience won’t wonder how it was made. They’ll just lean in.

Related resources: For visual storytelling needs, explore our guides on AI video generation and voice cloning best practices. All testing data current as of December 2025.